In this article, we will look at how to test Apache Kafka, a distributed streaming platform.

What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform that:

- Lets applications publish and subscribe to streams of records, similar to a message queue or enterprise messaging system.

- Processes streams of records as they occur, or later when they are needed.

- Is used by many large companies to build high-performance data pipelines, streaming analytics and data integration applications.

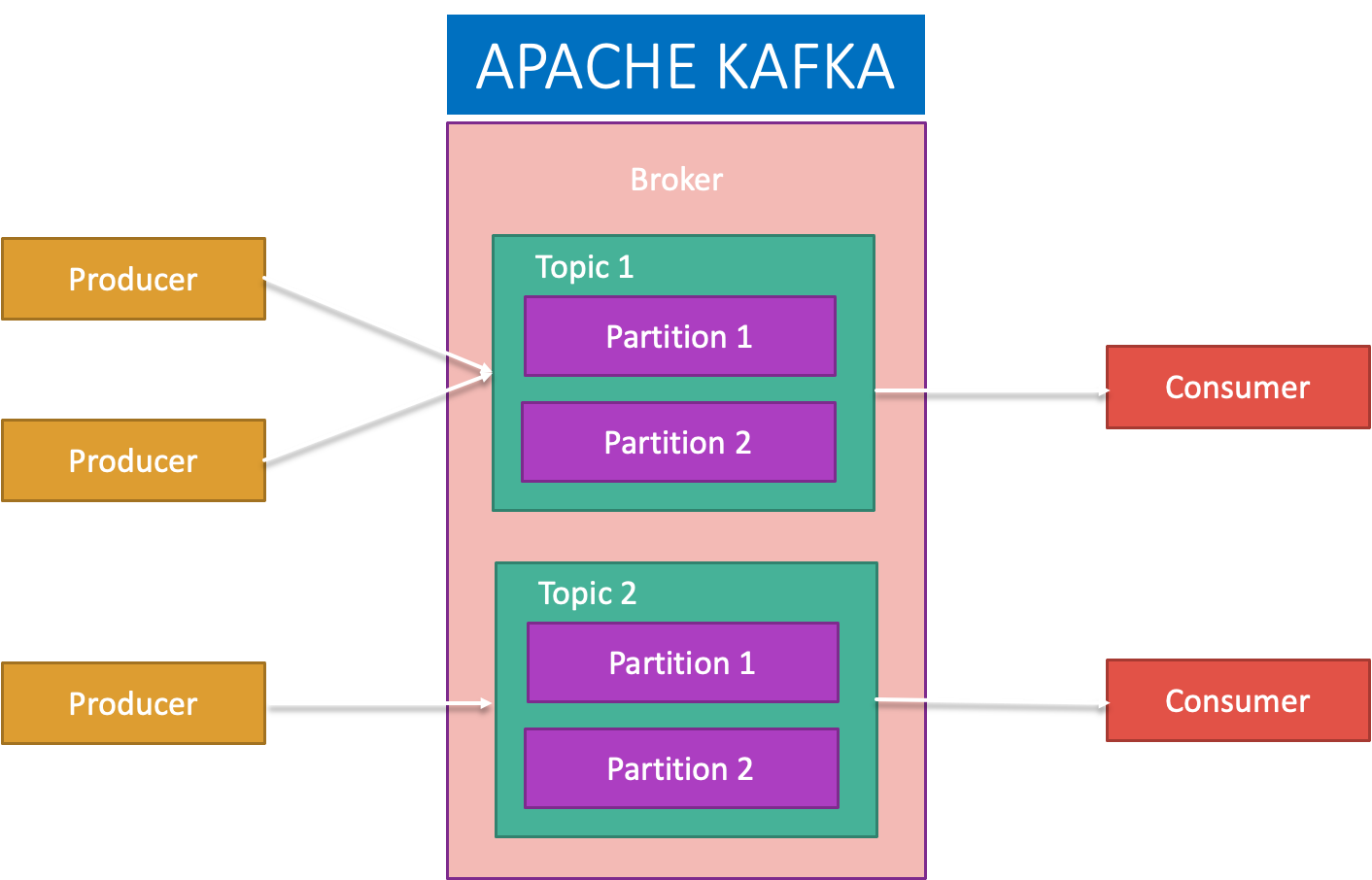

- Kafka is run as a cluster on one or more servers that can span multiple data centers.

- The Kafka cluster stores streams of records in categories called topics. Each record consists of three main pieces of information: a key, a value and a timestamp.
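To make the record structure concrete, here is a toy in-memory model of a topic and its records. It is only an illustration, not the Kafka client API. The class names `Record` and `Topic` are hypothetical, and a real Kafka topic is split into partitions that are replicated across brokers.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Record:
    """One record: a key, a value and a timestamp (toy model, not a Kafka class)."""
    key: Optional[str]   # Kafka allows records without a key
    value: bytes         # Kafka stores the payload as raw bytes
    timestamp_ms: int    # milliseconds since the Unix epoch


@dataclass
class Topic:
    """Toy topic: a named, append-only log (single partition, no replication)."""
    name: str
    records: List[Record] = field(default_factory=list)

    def append(self, key: Optional[str], value: bytes) -> int:
        """Append a record stamped with the current time and return its offset."""
        self.records.append(Record(key, value, int(time.time() * 1000)))
        return len(self.records) - 1


orders = Topic("orders")
first = orders.append("order-1", b'{"amount": 10}')
second = orders.append("order-2", b'{"amount": 25}')
print(first, second, orders.records[1].key)  # 0 1 order-2
```

Each new record gets the next offset in the log. This position is what consumers track when they read a topic.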


Kafka adoption among the ten largest companies in each industry

- 10/10 Largest insurance companies

- 10/10 Largest manufacturing companies

- 10/10 Largest information technology and services companies

- 8/10 Largest telecommunications companies

- 8/10 Largest transportation companies

- 7/10 Largest retail companies

- 7/10 Largest banks and finance companies

- 6/10 Largest energy and utilities organizations

Prerequisites for Kafka Testing

Before you start Kafka testing, follow this simple five-step process to gather the information you need:

- Get access to the test environment. In some cases, you can access the information through Splunk, Confluent or a GCS bucket on GCP.

- Create test data, either from the front end or from the back end.

- Find out where the topic resides.

- Find out what types of messages or records are produced to the topic.

- Find out what happens when the listeners consume the messages.
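Once you know what types of messages are produced to a topic, you can check the structure of each record automatically. The sketch below assumes the record values are JSON objects. The field names (`order_id`, `amount`, `currency`) and the `validate_message` helper are hypothetical placeholders for your own message contract. If your topics use Avro or Protobuf with a schema registry, you would validate against that schema instead.

```python
import json

# Hypothetical contract for messages on an "orders" topic: field name -> allowed type(s).
EXPECTED_FIELDS = {"order_id": str, "amount": (int, float), "currency": str}


def _type_name(expected_type):
    """Readable name for a type or a tuple of types."""
    if isinstance(expected_type, tuple):
        return " or ".join(t.__name__ for t in expected_type)
    return expected_type.__name__


def validate_message(raw_value: bytes, expected=EXPECTED_FIELDS):
    """Return a list of problems found in one JSON-encoded record value."""
    try:
        payload = json.loads(raw_value)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(payload, dict):
        return ["top-level value is not a JSON object"]

    problems = []
    for name, expected_type in expected.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected_type):
            problems.append(
                f"field {name} is {type(payload[name]).__name__}, "
                f"expected {_type_name(expected_type)}"
            )
    return problems
```

An empty list means the record matches the expected shape. Returning every problem at once, instead of stopping at the first one, makes failed test reports easier to act on.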

Testing Approach

With an end-to-end testing approach, we can validate the producer, the consumer and the application's processing logic. Once you are confident in the lower environment, you can run SIT (system integration testing) for full system validation.
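To show how the producer, the consumer and the processing logic can be checked together, here is a minimal sketch that replaces the Kafka cluster with an in-memory test double. `InMemoryBroker`, `producer`, `process` and the `orders` topic are all hypothetical. A real end-to-end test would use an actual Kafka client against the lower environment, but the three checkpoints stay the same.

```python
from collections import defaultdict


class InMemoryBroker:
    """Test double standing in for a Kafka cluster: topic name -> list of (key, value)."""

    def __init__(self):
        self.topics = defaultdict(list)

    def produce(self, topic, key, value):
        self.topics[topic].append((key, value))

    def consume(self, topic, offset):
        """Return the records from `offset` onward and the next offset to read."""
        records = self.topics[topic][offset:]
        return records, offset + len(records)


def producer(broker, orders):
    """Hypothetical producer under test: one record per (order_id, amount)."""
    for order_id, amount in orders:
        broker.produce("orders", order_id, amount)


def process(records, totals):
    """Hypothetical application logic under test: sum amounts per order id."""
    for key, amount in records:
        totals[key] = totals.get(key, 0) + amount
    return totals


def run_end_to_end():
    broker = InMemoryBroker()
    producer(broker, [("A1", 10), ("B2", 5), ("A1", 7)])

    # 1. Producer check: every record landed on the topic.
    assert len(broker.topics["orders"]) == 3

    # 2. Consumer check: everything was read and the offset advanced.
    records, next_offset = broker.consume("orders", 0)
    assert next_offset == 3

    # 3. Processing check: the application produced the expected result.
    totals = process(records, {})
    assert totals == {"A1": 17, "B2": 5}
    return totals


print(run_end_to_end())  # {'A1': 17, 'B2': 5}
```

Keeping one assertion per stage tells you where the pipeline broke, rather than only that the final result is wrong.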

Steps for validation

- Identify a test scenario.

- Create or produce the data for the identified scenario and validate it.

- Consume the data and validate it.

- Where the application exposes HTTP REST or SOAP APIs, use them to check the results. This makes validation easier and much more stable.
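The validation steps above can be sketched as a single test that produces a record, lets a consumer process it, and then checks the outcome through a REST endpoint. Everything here is a stand-in under stated assumptions. The in-memory `TOPIC` replaces a real Kafka topic. `consume_all` is a hypothetical consumer that marks orders as `ACCEPTED`. The `/orders/<id>` endpoint is a local stub served with Python's standard `http.server` in place of the application's real API. Only REST is shown. A SOAP check would follow the same pattern with a different request format.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

TOPIC = []      # hypothetical in-memory stand-in for a Kafka topic
PROCESSED = {}  # hypothetical application state exposed through the REST API


def consume_all():
    """Hypothetical consumer: marks every order on the topic as ACCEPTED."""
    for record in TOPIC:
        PROCESSED[record["order_id"]] = {**record, "status": "ACCEPTED"}


class OrderStatusHandler(BaseHTTPRequestHandler):
    """Stub REST endpoint: GET /orders/<id> returns the processed order as JSON."""

    def do_GET(self):
        order_id = self.path.rsplit("/", 1)[-1]
        if order_id in PROCESSED:
            status, body = 200, json.dumps(PROCESSED[order_id]).encode()
        else:
            status, body = 404, b'{"error": "not found"}'
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def validate_scenario():
    # Step 1: identify a test scenario: a new order should end up ACCEPTED.
    test_record = {"order_id": "T-1001", "amount": 25.0}

    # Step 2: produce the data and validate that it reached the topic.
    TOPIC.append(test_record)
    assert TOPIC[-1] == test_record

    # Step 3: consume the data.
    consume_all()

    # Step 4: validate the outcome through the REST API.
    server = HTTPServer(("127.0.0.1", 0), OrderStatusHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        with urlopen(f"http://127.0.0.1:{port}/orders/T-1001", timeout=5) as resp:
            assert resp.status == 200
            result = json.loads(resp.read())
    finally:
        server.shutdown()
        server.server_close()

    assert result["status"] == "ACCEPTED"
    return result


print(validate_scenario()["status"])  # ACCEPTED
```

Checking the result through the API, rather than reading the consumer's internals, keeps the test focused on behaviour the rest of the system actually depends on.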

What Do You Think?

Did this work for you?

Could I have done something better?

Have I missed something?

Please share your thoughts in the comments on this post, and let me know if there are particular topics you would like to read more about.

Cheers!